Artificial Intelligence in Test Automation is currently a hot topic in the UI test automation community, but what does it mean for testing, and what are its goals or outcomes?

In this blog, Richard Clark, CTO of Provar, shares some reflections on what we mean by Artificial Intelligence in Test Automation, how tools are (or aren’t!) using this technology, and how we’re incorporating its principles here at Provar.

Why Is Artificial Intelligence in Test Automation Popular?

Within the UI test automation community, there has been a big drive over the last 18 months towards self-healing and AI-based testing.

There may be various reasons that tools have taken this path, including:

- Their default locators are not robust, and test cases break when UI changes are made by developers, admins, or vendor application releases/patches.

- The locators bind to element IDs that are auto-generated by the application being tested. These vary across environments (e.g., Salesforce.com object IDs and custom field IDs) and even across changes in the same environment (e.g., Salesforce Visualforce j_id values, Aura IDs, Lightning page refresh).

- Their tests depend on multiple locators per element, which slows down test execution as they step through a hierarchy of ways to locate a screen element.

- Their locators don’t understand different page layouts, user experience themes, field-level security, picklist values, or record variants.

- There is more interest from investors in artificial intelligence in test automation.

So What Do These Other Test Tools Do, and What Specific “AI” Do They Have?

The tools generally apply one or more of the following:

- A record and playback function to capture the clicks and data entry from a human user and allow that data to be re-used for multiple test scenarios. This initial recording is often brittle and gives the false impression that test cases are being written more quickly.

- Create several locators for each screen element, plus an algorithm/score for what constitutes a match. This means that when one locator breaks, your test doesn’t necessarily fail. The issue with this approach is that your locators become less robust over time as changes accumulate, and you won’t know about the problem until the score drops below the algorithm’s threshold. In most cases, when it does break, you have to fix every single locator one by one.

- Building on the point above, add some ‘smarts’ that change all locators in one click so that the new preferred default locator is tried first.

- Cache web pages, identify a delta where there has been a change, find the references, and try to apply rules to relocate the field.

- Utilize machine learning to update their locator scoring algorithm using data from all their clients (yes, your test tool vendor may be capturing data about your application and test cases). This applies a single algorithm regardless of the application or user experience being tested: the lowest common denominator.

Image credit: CognitiveScale.com

What all of the above have in common is that, yes, some of them are self-healing, but there is no AI component to this, and where there is machine learning, it’s not specific to the application you wish to test.

These are just programmatic rules, not genuine self-learning features that adjust their algorithms or proactively optimize test cases automatically.

To use a famous AI example: if you teach an AI image classification system what an apple is, can it recognize that a picture of an orange is 100% also a fruit, rather than “probably not an apple”?

A further issue: what if the rules engine or probability algorithm results in tests passing when they should be failing? What if I do want my test to fail if the label changes or the wrong CSS class has been used?
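To make the multi-locator scoring approach and its false-pass risk concrete, here is a minimal sketch in Python. It is not taken from any real tool: the `Element` class, the attribute weights, and the threshold are all hypothetical values chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    """A simplified stand-in for a screen element (hypothetical)."""
    element_id: str
    label: str
    css_class: str


# Hypothetical weights (in points out of 100) for each matching attribute.
WEIGHTS = {"element_id": 50, "label": 30, "css_class": 20}


def match_score(recorded: Element, candidate: Element) -> int:
    """Add up the weights of the attributes that still match the recording."""
    return sum(
        weight
        for attr, weight in WEIGHTS.items()
        if getattr(recorded, attr) == getattr(candidate, attr)
    )


def locate(recorded, page, threshold=50):
    """Return the best-scoring candidate, or None if it is below the threshold."""
    best = max(page, key=lambda c: match_score(recorded, c), default=None)
    if best is None or match_score(recorded, best) < threshold:
        return None
    return best


recorded = Element("save_btn", "Save", "btn-primary")
# After a release: same ID, but the label and the CSS class have both changed.
changed = Element("save_btn", "Delete", "btn-danger")

# The fuzzy rule still "finds" the element, so the test keeps passing.
print(locate(recorded, [changed]) is changed)
# Demanding a full match makes the same change fail the test.
print(locate(recorded, [changed], threshold=100) is None)
```

Note that nothing here learns anything. It is a fixed rule with a tunable number, and whether a changed label should break the test depends entirely on where someone set that number.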

So, What is Artificial Intelligence (AI)?

We need to be very careful when using the term AI. Some companies seem to apply it rather liberally, possibly to dumb down their features for their audience or, more cynically, to improve their company valuation/share price.

Let’s take Google’s definition first:

“noun:

- The theory and development of computer systems able to perform tasks usually requiring human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.”

Hmm. On that basis, every UI test automation tool already has AI, since it performs a task that would otherwise require a human’s visual perception! Equally, that makes Google Translate an AI, too. I’m skeptical. Let’s try another well-used definition:

To paraphrase Wikipedia:

“…Computer science defines AI research as the study of “intelligent agents”: any device that perceives its environment and takes actions that maximize its chance of successfully achieving its goals. [1] More in detail, Kaplan and Haenlein define AI as “a system’s ability to correctly interpret external data, to learn from such data, and to use those learnings to achieve specific goals and tasks through flexible adaptation.”…”

OK, so this is more like it. When I studied AI at University in the 1980s, it was mainly about natural language processing and understanding syntax and semantics.

Understanding the rules of English will help a computer interpret a well-written text but leave it floundering on a typical Facebook comment or Tweet.

If a Test Automation tool claims to have AI, then we would have to have a tool that learns automatically, doesn’t have to be taught the rules but works them out for itself, and is continually improving, rewriting its rules and classifications.

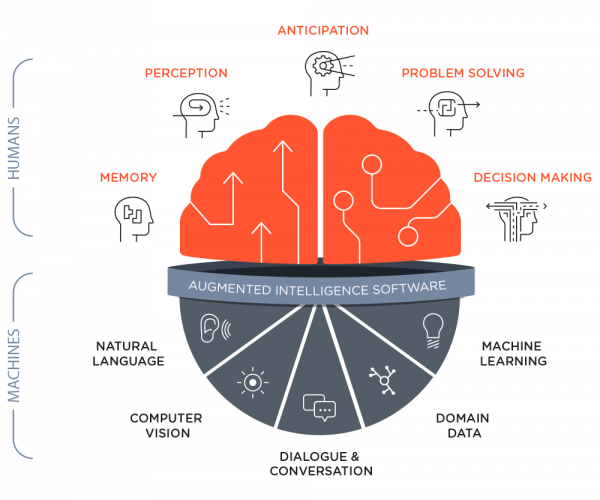

Image credit: Capability Cafe, “Impact of Artificial Intelligence/Machine Learning on Workforce Capability.”

In the case of Salesforce Einstein (and Google’s) Image Recognition, it does this based on thousands of examples. The more examples, the better it recognizes the specific objects that have been categorized already by a human so that it can give a percentage prediction for its recognition when it encounters a new image.

The human brain does the same thing with five possible results: It Is, It Might Be, I Don’t Know, I Don’t Think It Is, and It Isn’t. We don’t always get those right, of course, especially when it comes to UFOs, and likewise, AI image and speech recognition don’t get it right every time either.
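As a rough illustration of how a percentage prediction maps onto those five outcomes, here is a minimal Python sketch. The cut-off values are my own assumptions for the example. They do not come from Salesforce Einstein, Google, or any other real classifier.

```python
def verdict(confidence: float) -> str:
    """Map a classifier confidence (0.0 to 1.0) onto five human-style answers.

    The thresholds below are illustrative assumptions, not values from any
    real image or speech recognition service.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    if confidence >= 0.9:
        return "It Is"
    if confidence >= 0.6:
        return "It Might Be"
    if confidence > 0.4:
        return "I Don't Know"
    if confidence > 0.1:
        return "I Don't Think It Is"
    return "It Isn't"


for score in (0.95, 0.7, 0.5, 0.2, 0.0):
    print(f"{score:.2f} -> {verdict(score)}")
```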

Amazon Echo is pretty good, but it is frustrating when you have to repeatedly change what you say to get it to work. To carry on that theme, when I bought my first Echo, I could not get it to play “Songs by Sade,” but I could get it to play “Smooth Operator by Sade.” Now I can do both. It’s either learned or been patched.

Where does all this sit on Provar’s roadmap?

Provar uses metadata to create robust test cases in the first place so that many of the strategies used by other tools are not required. It also means that tests are black and white: there is no ‘probability’ of having the right locator. It is the right locator.

For most of our UI locators, we make any required changes when Salesforce releases its tri-annual updates so that you don’t have to. Even better, we do this within the pre-release window whenever possible so that you have the maximum time to run your regression tests during the Sandbox Preview.

If you’ve used one of our recommended locators based on Salesforce Metadata, your tests keep running and are robust. We also make it easy to create test data on the fly, so you don’t rely on pre-existing values in an org. You don’t need a self-healing tool if your tests are robust already.

Sometimes, it’s impossible to use a metadata locator, for example, if your developers have used a different UI framework or custom HTML, or when you are testing non-Salesforce CRM web pages.

So, for those edge cases, we’ve already been working on enhancing our existing XPath, Label, CSS, Block, and Table locators for generic pages.

This work is designed to improve our recommendation of robust locators and to let Provar learn about your Test Strategy so that we can default our recommended locators based on your previous selections.

We even remember the strategies you teach Provar so you can re-apply them across your applications. Unlike other tools, we let you keep these strategies separate, as the strategy for testing Salesforce isn’t necessarily the best for SAP, Workday, or Dynamics 365.
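To show the general idea of defaulting to previously chosen strategies, kept separate per application, here is a minimal Python sketch. It is emphatically not Provar’s implementation: the `LocatorPreferences` class, its method names, and the fallback default are hypothetical, and the “learning” is nothing more than counting.

```python
from collections import Counter, defaultdict


class LocatorPreferences:
    """Remember which locator strategy a tester picks, per application.

    Hypothetical illustration only; the names and the default are assumptions.
    """

    def __init__(self, default: str = "xpath"):
        self._default = default
        # One independent tally of chosen strategies for each application.
        self._choices = defaultdict(Counter)

    def record_choice(self, application: str, strategy: str) -> None:
        """Note that the tester chose this strategy for this application."""
        self._choices[application][strategy] += 1

    def recommend(self, application: str) -> str:
        """Suggest the most frequently chosen strategy, or the default."""
        counts = self._choices.get(application)
        if not counts:
            return self._default
        return counts.most_common(1)[0][0]


prefs = LocatorPreferences()
for strategy in ("label", "label", "css"):
    prefs.record_choice("Salesforce", strategy)
prefs.record_choice("SAP", "css")

print(prefs.recommend("Salesforce"))  # label
print(prefs.recommend("SAP"))         # css
print(prefs.recommend("Workday"))     # xpath (no history yet, so the default)
```

Keeping one tally per application is what stops a habit formed on one system from leaking into the recommendations for another.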

We’ve also been investigating collaborations with other Salesforce vendor tools to help you better understand test coverage against your specific Salesforce implementation/customizations and to generate test steps automatically (generating steps is relatively easy for us to do; generating reasonable ones is the hard part). Watch this space: some of these features are at least six months away.

Finally, we’re keeping a close eye on concepts such as Mutation Testing, which could lead to a paradigm shift in how tests are authored and how they can evolve automatically to provide a level of exploratory testing.

It may seem like science fiction, but so were self-driving cars and having a conversation with a computer just ten years ago.
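For readers who haven’t met Mutation Testing before, the core idea is to make small deliberate changes (“mutants”) to the code under test and check whether the test suite notices. The Python sketch below is a hand-rolled toy under my own assumptions: the function, the suites, and the mutants are hypothetical, and real mutation testing tools generate the mutants automatically from source code.

```python
import operator


def make_is_adult(compare=operator.ge):
    """Build the function under test; the comparison is the mutation point."""
    def is_adult(age: int) -> bool:
        return compare(age, 18)
    return is_adult


def weak_suite(fn) -> bool:
    """Checks only values far away from the boundary."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn) -> bool:
    """Adds checks on both sides of the boundary."""
    return weak_suite(fn) and fn(18) is True and fn(17) is False


# Each mutant swaps the original `>=` for a different comparison.
MUTANTS = {">= to >": operator.gt, ">= to <=": operator.le, ">= to ==": operator.eq}


def surviving_mutants(suite) -> list:
    """List the mutants that the suite fails to detect (lower is better)."""
    if not suite(make_is_adult()):
        raise ValueError("suite must pass against the original code")
    return [name for name, op in MUTANTS.items() if suite(make_is_adult(op))]


print(surviving_mutants(weak_suite))    # ['>= to >']
print(surviving_mutants(strong_suite))  # []
```

A surviving mutant points at a gap in the tests, here the missing boundary check at 18, which is the kind of signal a tool could use to suggest or evolve new test cases.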

We don’t consider this to be Artificial Intelligence. We call it common sense applied to enhance our users’ experience, but if you’re comparing different tools, then OK, call it AI if you insist.

So Are There Any Real AI Test Tools out there?

Some frameworks are beginning to show genuine self-learning and predictive testing elements: frameworks that can generate tests for you or identify which changes may be breaking changes vs. cosmetic ones.

I use the term frameworks deliberately. If you have coded test automation and are happy to have the overhead to maintain that code base, then there is some very cool tech that includes a level of machine learning.

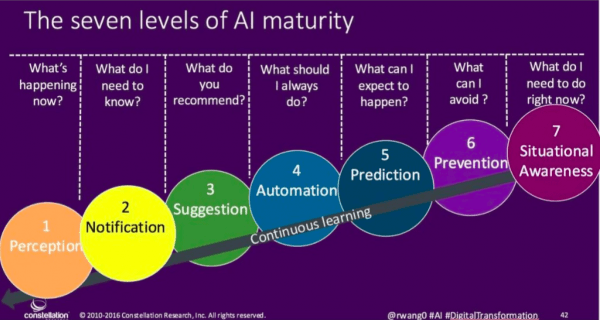

Image credit: ca.com, “Artificial intelligence and continuous testing: It’s the next big thing (really).”

Regarding no-code solutions such as Provar, I’m yet to see anything more than a rules engine you have to ‘program’ or a generic locator algorithm.

Indeed, I’ve seen no other tools that can handle a changeover between user experiences, such as Salesforce Classic to Lightning, with test cases that run seamlessly on both UIs and keep running across multiple releases without changes to your test case.

At Provar, we have always made it our mission to help Salesforce customers create and maintain robust test cases for their UI and API tests. We accomplish this in the following ways:

- We leverage the Salesforce metadata to identify screen elements and field values automatically.

- We automatically recognize the page you want to test and build all the required navigation steps.

- We leverage the Salesforce API to interact directly with the underlying data model.

- We suggest alternate locators and allow you to override our recommendations.

- We let you build reusable test cases across Salesforce UIs such as Classic, Lightning, Mobile, and Communities.

- We continuously improve Provar to stay in sync with the latest releases from Salesforce, Selenium WebDriver, and multiple browser vendors.

- We provide in-built Provar APIs and a custom API to extend capabilities beyond Salesforce to perform meaningful end-to-end test cases.

What tools like Provar have delivered, and continue to deliver, is Artificial Intelligence in Test Automation in the form of robust test scripts integrated into an enterprise’s Continuous Integration and Continuous Delivery processes. It’s natural, has rapid ROI, and it’s relatively easy to achieve. It’s intelligent, but there’s nothing artificial about it!